Text Summarization

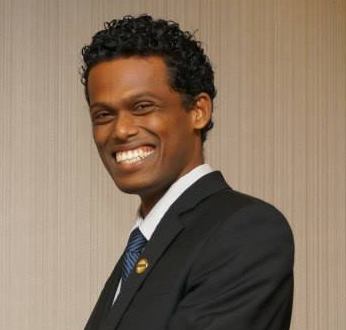

Principal Investigator: Nisansa de Silva

We propose to advance automatic summarization and generation techniques, with a focus on aspect-aware methods that capture fine-grained information and sentiment within concise textual representations.

Automatic summarization and text generation are crucial in natural language processing as they reduce long or complex texts into coherent, concise outputs that preserve the most salient information. This project aims to extend these methods by incorporating aspect-based sentiment analysis principles into summarization and generation tasks.

We explore deep hybrid models and transfer learning approaches to generate accurate, aspect-aware summaries, abstracts, and headlines. Special emphasis is placed on multi-document summarization, where information needs to be aggregated from multiple sources without losing coherence or sentiment orientation. Comparative evaluations are conducted to measure the effectiveness of different modeling strategies, ranging from neural architectures to adapter-based fine-tuning of large pre-trained models.
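As a rough illustration of what such a comparative evaluation can look like, the sketch below scores candidate summaries against a reference using unigram overlap (a simplified ROUGE-1 F1). This page does not name the metrics used in the project, so the choice of metric, the whitespace tokenization, and the function names `rouge1_f1` and `rank_systems` are assumptions made for this example only.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between a candidate and a reference summary.

    Simplified: lowercased whitespace tokens, no stemming or stopword handling.
    """
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    if not cand_tokens or not ref_tokens:
        return 0.0
    # Clipped overlap: a token counts at most as often as it occurs in both texts.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(cand_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


def rank_systems(outputs: dict[str, str], reference: str) -> list[tuple[str, float]]:
    """Score each system's summary against the reference, best first.

    `outputs` maps a (hypothetical) system name to its generated summary.
    """
    scores = {name: rouge1_f1(text, reference) for name, text in outputs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)
```

Calling `rank_systems` with one entry per modeling strategy returns the strategies ordered by score. A full benchmark would average scores over a whole test set and typically report several metrics rather than a single overlap score.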

In addition, we aim to create and share multilingual and low-resource datasets that enable experimentation in summarization and sentiment-aware text generation. By combining innovations in modeling, evaluation, and data curation, this work aims to produce summarization systems that generate more nuanced, aspect-sensitive summaries and support broader advances in natural language understanding.

Objectives:

- Investigate neural and hybrid approaches for generating structured summaries and condensed representations of long or complex texts.

- Develop multi-document summarization methods capable of integrating information from diverse sources into coherent outputs.

- Explore transfer learning and adapter-based fine-tuning techniques to adapt large pre-trained models for summarization tasks.

- Evaluate and benchmark summarization models across different methods (rule-based, neural, and hybrid) to identify performance trade-offs.

- Build multilingual and low-resource datasets to support experimentation in summarization and text generation.

- Advance automatic headline and abstract generation models that can capture both content and sentiment-linked aspects effectively.

Keywords: Natural Language Processing | Machine Learning / Deep Learning | Sinhala | Multi-document summarization | Pre-trained Models

Publications

Theses: MSc Minor Component Research

Pubudu Cooray, "Headline Generation for Sinhala Newspaper Articles using Pre-trained Language Models", University of Moratuwa, 2025

Dushan Kumarasinghe, "Automatic Generation of Research Paper Abstracts using Deep-Hybrid Models", University of Moratuwa, 2025

Kushan Hewapathirana, "Towards Multi-document Summarisation in Low-resource Settings", University of Moratuwa, 2025

Conference Papers

Kushan Hewapathirana, Nisansa de Silva, and C D Athuraliya, "M2DS: Multilingual Dataset for Multi-document Summarisation", in International Conference on Computational Collective Intelligence, 2024, pp. 219–231. doi: 10.1007/978-3-031-70248-8_17

Kushan Hewapathirana, Nisansa de Silva, and C D Athuraliya, "Multi-Document Summarization: A Comparative Evaluation", in 2023 IEEE 17th International Conference on Industrial and Information Systems (ICIIS), 2023, pp. 19–24. doi: 10.1109/ICIIS58898.2023.10253581

Dushan Kumarasinghe and Nisansa de Silva, "Automatic Generation of Abstracts for Research Papers", in Proceedings of the 34th Conference on Computational Linguistics and Speech Processing (ROCLING 2022), 2022, pp. 221–229.

Extended Abstracts

Kushan Hewapathirana, Nisansa de Silva, and C D Athuraliya, "Adapter-based Fine-tuning for PRIMERA", in 1st Applied Data Science & Artificial Intelligence Symposium, April 2025.

Team

External Collaborators: C D Athuraliya