Headline Generation for Sinhala Newspaper Articles using Pre-trained Language Models

Advised by: Surangika Ranathunga, Nisansa de Silva

University of Moratuwa

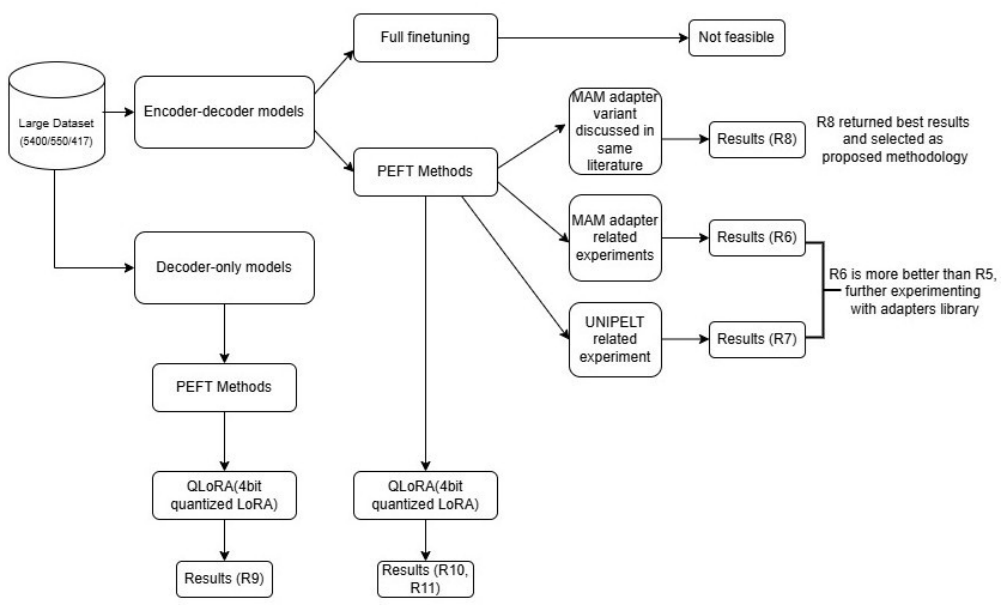

Automatic headline generation for Sinhala is challenging because of the language's distinctive linguistic characteristics and the limited resources available for Sinhala natural language processing (NLP). This research addresses that gap by fine-tuning three pre-trained language models, mBART, mT5, and Llama 3, to generate accurate, contextually relevant headlines for Sinhala news articles. It uses parameter-efficient fine-tuning (PEFT) methods, including adapters applied to the feed-forward and attention layers, to improve performance and computational efficiency. The models are evaluated on four diverse Sinhala news datasets. The findings indicate that mBART outperforms both mT5 and Llama 3 in generating concise and coherent headlines, especially on structured datasets, which suggests that mBART handles the complexities of Sinhala robustly. However, while mBART excels with formal content, it struggles with informal datasets such as Hiru and Gossip Lanka, highlighting the need for further refinement and specialized techniques to handle the linguistic features of these sources. Overall, this work advances automatic headline generation for Sinhala and contributes to the broader study of NLP for low-resource languages and the varied content styles they encompass.

Keywords: Natural Language Processing | Machine Learning / Deep Learning | Sinhala | Headline Generation | Text Summarization | Text Generation | LLM