Adapter-based Fine-tuning for PRIMERA

1st Applied Data Science & Artificial Intelligence Symposium

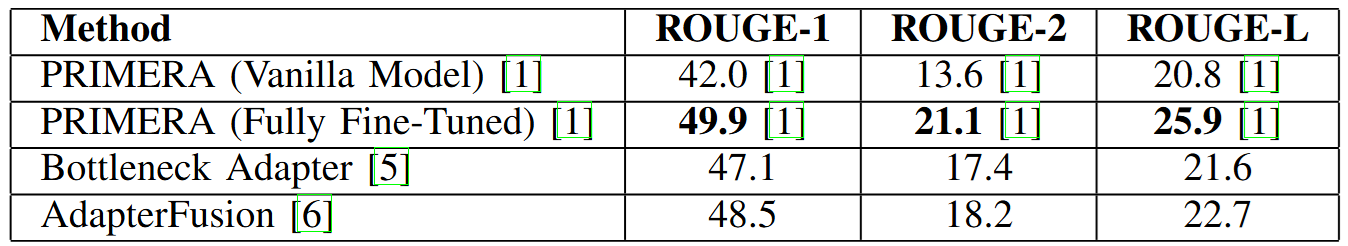

Multi-document summarisation (MDS) is the task of generating a concise summary from a cluster of related documents. PRIMERA (Pyramid-based Masked Sentence Pre-training for Multi-document Summarisation) is a pre-trained model designed specifically for MDS; it builds on the Longformer Encoder-Decoder (LED) architecture to handle long input sequences. Despite these capabilities, fine-tuning PRIMERA for a specific task remains resource-intensive. To mitigate this, we explore integrating adapter modules (small, trainable components inserted within transformer layers) that let the model adapt to a new task by updating only a fraction of its parameters, thereby reducing computational requirements.
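To give a sense of scale for "only a fraction of the parameters", the following is a minimal sketch, not the configuration used in this work. It assumes a standard bottleneck adapter (a down-projection followed by an up-projection, both with biases) and a frozen backbone. The names `AdapterConfig`, `adapter_params`, and `trainable_fraction` are hypothetical, and the layer count, hidden size, bottleneck size, and backbone size are illustrative placeholders rather than reported values.

```python
from dataclasses import dataclass


@dataclass
class AdapterConfig:
    """Hypothetical settings for a bottleneck adapter (not taken from the source)."""
    hidden_size: int          # width of the transformer layer (d_model)
    bottleneck_size: int      # reduced width inside the adapter
    num_layers: int           # transformer layers that receive an adapter
    adapters_per_layer: int = 1


def adapter_params(cfg: AdapterConfig) -> int:
    """Count trainable parameters: down- and up-projection weights plus biases."""
    down = cfg.hidden_size * cfg.bottleneck_size + cfg.bottleneck_size
    up = cfg.bottleneck_size * cfg.hidden_size + cfg.hidden_size
    return (down + up) * cfg.adapters_per_layer * cfg.num_layers


def trainable_fraction(cfg: AdapterConfig, frozen_params: int) -> float:
    """Share of all parameters that are updated when the backbone stays frozen."""
    added = adapter_params(cfg)
    return added / (frozen_params + added)


# Illustrative numbers only: 24 layers of width 1024, a bottleneck of 64,
# and an assumed 400M-parameter frozen backbone.
cfg = AdapterConfig(hidden_size=1024, bottleneck_size=64, num_layers=24)
print(f"adapter parameters: {adapter_params(cfg):,}")
print(f"trainable share:    {trainable_fraction(cfg, 400_000_000):.2%}")
```

Under these assumed values the adapters account for under one percent of all parameters, which is the source of the reduction in memory and compute during fine-tuning.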

Keywords: Natural Language Processing | Machine Learning / Deep Learning | Multi-document Summarisation | Pre-trained Models | Adapters