Multi-Domain Neural Machine Translation with Knowledge Distillation For Low Resource Languages

Advised by: Surangika Ranathunga, Nisansa de Silva

University of Moratuwa

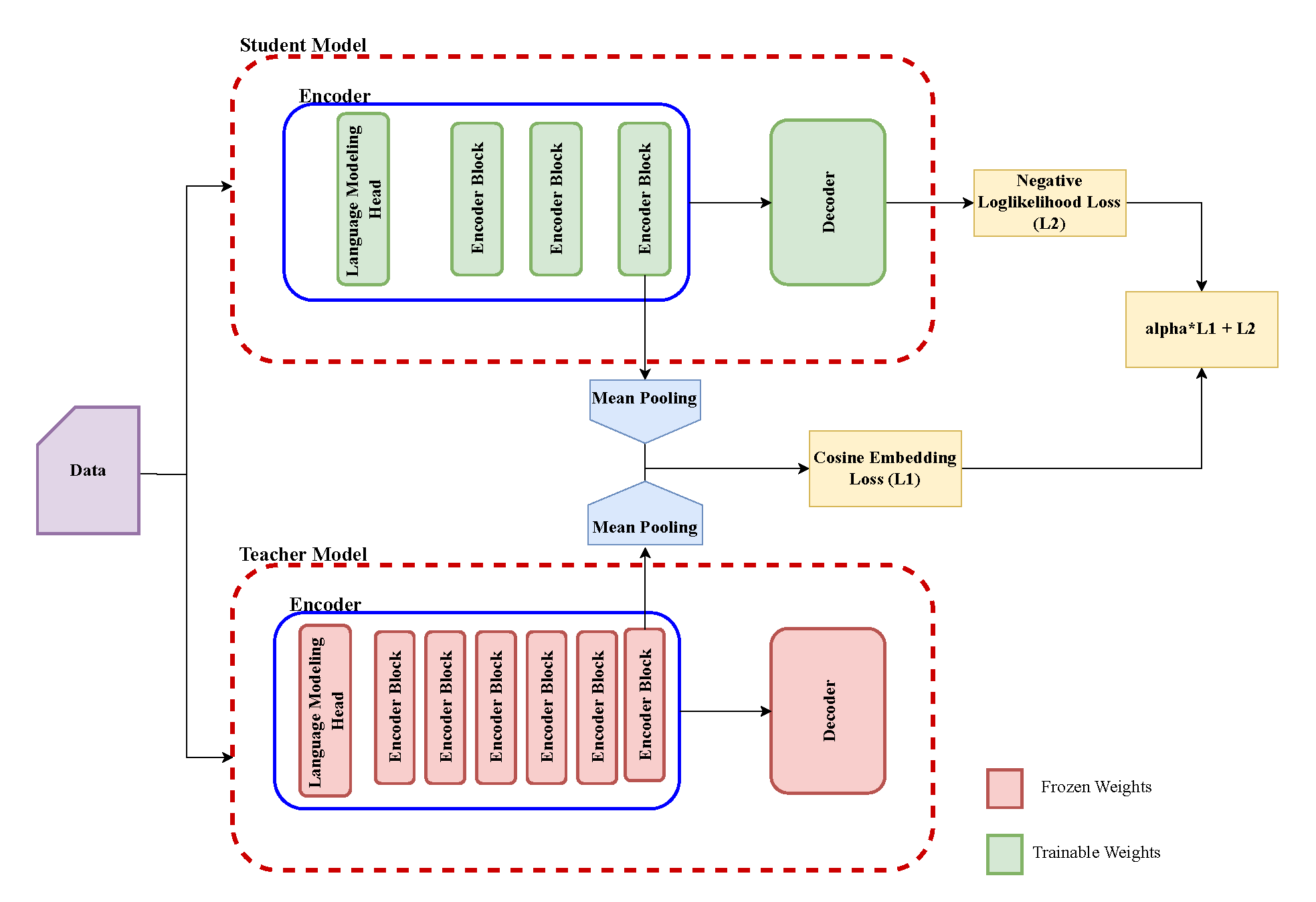

Multi-domain adaptation in Neural Machine Translation (NMT) is crucial for ensuring high-quality translations across diverse domains. Traditional fine-tuning approaches, while effective, become impractical as the number of domains increases: they incur high computational and storage costs and are prone to catastrophic forgetting. Knowledge Distillation (KD) offers a scalable alternative by training a compact student model on data distilled from a larger teacher model. However, we hypothesize that sequence-level KD primarily distills the decoder while neglecting encoder knowledge transfer, resulting in suboptimal adaptation and generalization, particularly in low-resource language settings where both data and computational resources are constrained. To address this, we propose an improved sequence-level distillation framework enhanced with encoder alignment through a cosine similarity-based loss. Our approach ensures that the student model captures both encoder and decoder knowledge, mitigating the limitations of conventional KD. We evaluate our method on multi-domain German-English translation under simulated low-resource conditions and extend the evaluation to a bona fide low-resource language to test its robustness across diverse data conditions. The results show that our encoder-aligned student model can even outperform its larger teacher models, achieving strong generalization across domains. Additionally, our method enables efficient adaptation when fine-tuned on new domains, surpassing existing KD-based approaches. These findings establish encoder alignment as a crucial component of effective knowledge transfer in multi-domain NMT, with significant implications for scalable and resource-efficient domain adaptation.
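
As an illustration of the cosine similarity-based encoder alignment described above, the following is a minimal sketch and not the authors' implementation. It assumes that teacher and student encoder states have the same sequence length and hidden size (in practice a projection layer may be needed when they differ), that the alignment term is averaged over tokens, and that it is added to the sequence-level KD loss with a scalar weight. The names `encoder_alignment_loss`, `total_loss`, and `alignment_weight` are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        # Convention chosen for this sketch: a zero vector has similarity 0.
        return 0.0
    return dot / (norm_u * norm_v)


def encoder_alignment_loss(teacher_states, student_states):
    """Mean of (1 - cosine similarity) over token positions.

    Each argument is a list of per-token hidden-state vectors.
    Assumption: both encoders produce the same number of tokens
    and the same hidden size.
    """
    if not teacher_states or len(teacher_states) != len(student_states):
        raise ValueError("expected two non-empty sequences of equal length")
    per_token = [
        1.0 - cosine_similarity(t, s)
        for t, s in zip(teacher_states, student_states)
    ]
    return sum(per_token) / len(per_token)


def total_loss(seq_kd_loss, alignment_loss, alignment_weight=1.0):
    """Hypothetical combination: sequence-level KD loss plus weighted alignment."""
    return seq_kd_loss + alignment_weight * alignment_loss
```

Under these assumptions the alignment term is 0 when the student's encoder states point in the same direction as the teacher's and grows toward 2 as they point in opposite directions, so minimizing it pulls the student encoder toward the teacher's representations independently of vector magnitude.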

Keywords: Natural Language Processing | Machine Learning / Deep Learning | Sinhala | Neural Machine Translation | Knowledge Distillation | Multi-domain Adaptation | Low-resource Languages | Encoder Alignment | Sequence-level Distillation