Automatic Analysis of App Reviews Using LLMs

Proceedings of the Conference on Agents and Artificial Intelligence

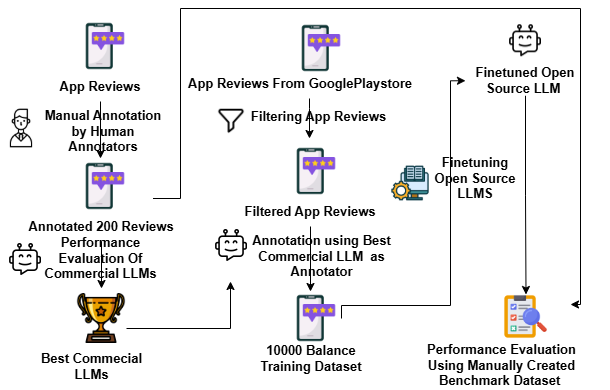

Large Language Models (LLMs) have shown promise in various natural language processing tasks, but their effectiveness for classifying app reviews to support software evolution remains unexplored. This study evaluates commercial and open-source LLMs for classifying mobile app reviews into four categories: bug reports, feature requests, user experiences, and ratings. Comparing the zero-shot performance of GPT-3.5 and Gemini Pro 1.0, we find that GPT-3.5 performs better, achieving an F1 score of 0.849. We then use GPT-3.5 to annotate a dataset autonomously, and this dataset serves as training data for fine-tuning smaller open-source models. Experiments with Llama 2 and Mistral show that instruction fine-tuning significantly improves their performance, bringing their results close to those of the commercial models. We also investigate the trade-off between training data size and number of epochs, showing that comparable results can be achieved with smaller datasets by increasing the number of training epochs. Additionally, we explore how different prompting strategies affect model performance. Our work demonstrates the potential of LLMs to enhance app review analysis in software engineering, while highlighting areas where open-source alternatives still need improvement.

Keywords: Natural Language Processing | Machine Learning / Deep Learning | Mobile App Review Analysis | LLM | App Review Analysis | Mobile Applications | Software Evolution | GPT | Fine-tuning | Review Classification | Zero-Shot Learning | Open-Source Models
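The zero-shot classification and F1 evaluation summarized in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the prompt wording, the helper names (`build_zero_shot_prompt`, `parse_label`, `macro_f1`), the single-label framing, and the choice of macro-averaged F1 are all hypothetical, since the abstract specifies none of them. The actual call to GPT-3.5 or Gemini Pro is omitted; any function that sends the prompt and returns the model's text reply would sit between the first two helpers.

```python
from collections import Counter
from typing import List, Optional

# The four review categories named in the abstract.
LABELS = ("bug report", "feature request", "user experience", "rating")


def build_zero_shot_prompt(review: str) -> str:
    # Hypothetical prompt wording (assumption): zero-shot means the prompt
    # contains only the task description and label set, no labelled examples.
    options = ", ".join(LABELS)
    return (
        "Classify the following mobile app review into exactly one of these "
        f"categories: {options}.\n"
        f"Review: {review}\n"
        "Answer with the category name only."
    )


def parse_label(response: str) -> Optional[str]:
    # Map the model's free-text reply to a label: pick the label that appears
    # earliest in the reply, or None if no label name appears at all.
    text = response.lower()
    hits = [(text.find(label), label) for label in LABELS if label in text]
    return min(hits)[1] if hits else None


def macro_f1(gold: List[str], predicted: List[Optional[str]]) -> float:
    # Per-label F1 averaged with equal weight (macro average, an assumption).
    # An unparseable prediction (None) counts as a miss for the gold label.
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, predicted):
        if p == g:
            tp[g] += 1
        else:
            fn[g] += 1
            if p is not None:
                fp[p] += 1
    scores = []
    for label in LABELS:
        predicted_count = tp[label] + fp[label]
        gold_count = tp[label] + fn[label]
        precision = tp[label] / predicted_count if predicted_count else 0.0
        recall = tp[label] / gold_count if gold_count else 0.0
        total = precision + recall
        scores.append(2 * precision * recall / total if total else 0.0)
    return sum(scores) / len(scores)


if __name__ == "__main__":
    print(build_zero_shot_prompt("The app crashes every time I open the camera."))
    gold = ["bug report", "rating", "feature request", "user experience"]
    replies = ["Bug report", "Rating.", "This is a bug report", "user experience"]
    predicted = [parse_label(r) for r in replies]
    print(round(macro_f1(gold, predicted), 3))  # → 0.667
```

Macro averaging weights the four categories equally, so a rarely occurring category counts as much as a frequent one; a micro average would instead be dominated by the most common category. Which averaging underlies the reported score of 0.849 is not stated in the abstract.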